Back in October, at New York Advertising Week, suddenly all my meetings were about digital twins. Remember when consumer research used to be so expensive and time-consuming? folks were asking me. Not anymore. Now we have robots to take your survey.

Digital twins are no longer a mere concept--they have entered my commercial reality.

It’s an intriguing proposition! Get a sample of men 35-49. Ask them questions. Infer their characteristics--call it distaste for authority and openness to new ideas--and let your modeled male 35-49 take your survey. Research might become so cheap, you could pop out questions to a panel of robots like you were firing off a one-line Slack. Orange or cherry? Vinyl or leather?

And just as I was digesting these developments, a Stanford computer science team--led by a baby-faced PhD candidate named Joon Sung Park--announced they had created models that can replicate actual people and their personalities.

Not a robot version of the cohort “men 35-49.” Like, a robot version of 38-year-old Harry Smith of 100 Elm Street, Decatur, Georgia.

The Stanford team had created replicas of one thousand individuals.

I, NOT-ROBOT

How it worked is like this: Park and his team paid actual people a hundred bucks to take a battery of personality tests… values tests (“if a man and a woman have sexual relations before marriage, do you think it is always wrong?”)… play economic games like the so-called “dictator game” invented by the late Nobel-ist Daniel Kahneman… and provide detailed demographics. Then each subject was interviewed for two hours. (The interview was conducted, naturally, by an AI.)

The interviews get pretty real:

[Interviewee]: So I had a head injury while I was in elementary school and the full weight of that untreated injury came to bear when I was in about 8th grade and I started having these really bad mood swings. So I spent most of my high school years in deep depression and no one got me any help for it. It was pretty awful.

I mean, this is the stuff of sleepovers and weird third dates.

Then the Stanford team tested the robot versions of all their subjects with another battery of quizzes—to see how closely the robot answers matched their human originals.

Illustration from “Generative Agent Simulations of 1,000 People” by Park et al.

The results were not perfect. But they were pretty goddam close. The robot versions matched their human originals’ survey answers about 85% as accurately as the humans matched their own answers two weeks later.
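For readers who want the arithmetic behind a figure like 85%: the Park et al. paper reports accuracy normalized by how consistently each person reproduces their own answers on a retest two weeks later. Here is a minimal Python sketch of that ratio. The function names and the ten sample answers are made up for illustration, and this is not the Stanford team's code.

```python
def match_rate(a, b):
    """Fraction of questions on which two answer lists agree."""
    if len(a) != len(b) or not a:
        raise ValueError("answer lists must be non-empty and equal length")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def normalized_accuracy(human_first, human_retest, twin):
    """Twin-vs-human agreement divided by the human's own retest agreement."""
    self_consistency = match_rate(human_first, human_retest)
    if self_consistency == 0:
        raise ValueError("human retest agreement is zero; ratio undefined")
    return match_rate(human_first, twin) / self_consistency


# Made-up answers to ten multiple-choice questions (illustrative only).
human_first  = ["a", "b", "c", "a", "d", "b", "a", "c", "d", "a"]
human_retest = ["a", "b", "c", "a", "d", "b", "a", "c", "b", "c"]  # 8 of 10 match
twin         = ["a", "b", "c", "a", "d", "b", "b", "a", "b", "c"]  # 6 of 10 match

score = normalized_accuracy(human_first, human_retest, twin)
print(f"{score:.2f}")
```

The point of dividing by the retest rate is that people are not perfectly consistent with themselves, so a twin is graded against what a human could realistically achieve rather than against 100%.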

And this, of course, made me think of my favorite Victorian gothic novel, The Picture of Dorian Gray.

DORIAN GRAY vs. FRANKENSTEIN

In Oscar Wilde’s novel, a pretty young London bachelor named Dorian Gray sits for a portrait. He learns his picture has strange powers: if Dorian does nice things, his picture looks beatific. If he does wicked things, the picture’s face turns demonic.

Wilde’s story is a great illustration of one aspect of the digital twin race.

In this domain, a digital twin is merely a replica. It exists so we can ask it questions, poke it, prod it, test things on it.

And we can do all this at the merciless pace of technology--testing thousands of variations quickly, and of course, cheaply. The virtual Harry Smith never gets tired of answering questions. And you only had to pay the real Harry Smith $100, once, to model him.

Just like Dorian Gray got to do unspeakable things down at the docks every night, and always looked like a million bucks in the morning--because his portrait version paid the price.

There is a fork in the road of digital twins. The first fork is Dorian Gray. To understand the second fork, let’s stick with gothic novels from the 1800s--I mean, why wouldn’t we--and call it the Frankenstein version.

On this second fork, Frankenstein’s monster was created to think, feel, and act on his own. This is not a digital twin, but a digital double. Or--as they are increasingly being called--an agent.

Dorians are replicas for testing and learning.

Frankensteins are agents for acting on our behalf.

First framework for applying digital twins to manufacturing, presented by Michael Grieves in “Origins of the Digital Twin Concept,” 2016

Today, we will stick with the Dorians. Because Dorian Gray illustrates the three essential components of a digital twin.

First you need a physical original. (The bachelor)

Second, you need a virtual double. (The painting)

Third, you need data to pass back and forth between the two--which is where the learning happens. (Testing the effect of moral and immoral behavior on the painting)
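The three components above can be sketched in a few lines of Python. This is a toy under stated assumptions: the class names, the temperature reading, and the 100-degree limit are all hypothetical, chosen only to show an original, a double, and data moving between them.

```python
from dataclasses import dataclass, field


@dataclass
class PhysicalOriginal:
    """Stand-in for the real thing: here, a part with a measured temperature."""
    temperature: float

    def read_sensor(self) -> float:
        return self.temperature


@dataclass
class VirtualDouble:
    """The replica: holds the last synced state and answers what-if questions."""
    temperature: float = 0.0
    history: list = field(default_factory=list)

    def sync(self, reading: float) -> None:
        # Data flowing from the original to the double.
        self.temperature = reading
        self.history.append(reading)

    def what_if(self, added_heat: float, limit: float) -> bool:
        # Test a scenario on the replica; True means it stays within the limit.
        return self.temperature + added_heat <= limit


original = PhysicalOriginal(temperature=70.0)
twin = VirtualDouble()
twin.sync(original.read_sensor())

safe = twin.what_if(added_heat=25.0, limit=100.0)    # 95 is within the limit
unsafe = twin.what_if(added_heat=40.0, limit=100.0)  # 110 exceeds the limit
print(safe, unsafe)
```

The experiments run on the double, never on the original, which is the whole appeal: the painting takes the punishment so the bachelor doesn't have to.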

LIVER ON A CHIP

The digital twin becomes more useful, and more powerful, when you operate in an expensive, complex environment, where your time is very short, or where your cost of failure is high.

For Dorian Gray, the cost of losing his reputation in judge-y Victorian London would have been catastrophic. For some non-fictional examples--NASA, BMW, the America’s Cup, and the drug industry--the stakes are equally high.

Take spacecraft. No system is more complex, or higher-stakes, than manned space flight. NASA and the Air Force started collaborating on aircraft, using digital twinning, as far back as 2012.

You create your physical version of the aircraft. You create a digital version--with systems for airframe, propulsion and energy storage, life support. Then you test it, and measure its IVHM, or integrated vehicle health management, data. How does the virtual version handle extreme loads? Extreme heat?

Spacecraft development = expensive environment.

On an automotive factory floor, the interaction with all the workers, the use of space, is impossibly complex. How close to the assembly line should the rack of parts be, so your worker can reach them and install them easily? What if “easily” saves you thousands of hours of labor, in aggregate, every month?

BMW created a virtual factory. Its managers and workers played it like a video game, testing different manufacturing tactics (move the parts rack a foot closer and six inches higher). It was easier than futzing with the whole real assembly line.

Manufacturing = complex environment.

When the Emirates Team New Zealand sailing team wanted to design a sailing craft in time for the 2021 America’s Cup, they had eighteen months. So they taught a simulator to sail. (Of course they did.) Then they tested thousands of design tweaks to the sailboat.

Imagine how laborious and time-intensive it would have been to build a hydrofoil two feet high, two feet two inches high, two feet three inches high, installing it, testing it by getting the whole crew out on the water, holding conditions identical, each time? And still having time to manufacture the final design? Using a digital twin, they finished on deadline. (And yes, they won.)

Sports + manufacturing + deadline = extreme time sensitivity.

My favorite example is closer to Dorian Gray. Only instead of testing human morality on a portrait, we test drugs--on a human organ on a chip.

A company called Emulate uses polymers, collagen, UV radiation and a cocktail of human liver cells to create virtual livers for drug companies to test on. No more animal testing. You can test your drug on a few hundred synthetic Liver Chips; spare a generation of mice; and presumably, accelerate more quickly past the actual human suffering incurred in clinical trials when the drug goes wrong and turns your eyes yellow.

Testing on living creatures = high cost of failure.

And yes, Emulate also makes a Brain Chip. “It can be created,” goes the marketing material, “using a Basic Research Kit and a user’s own cell sources.”

The question is: can it do my expenses for me?

All of which takes us back to Stanford, and the 1,000 virtual humans.

THROUGH THE LOOKING GLASS

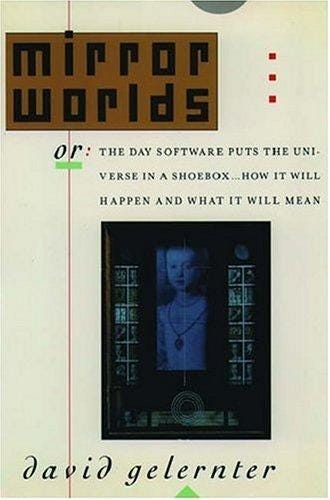

The first mention of the idea of digital twins is credited to a computer scientist named David Gelernter, who wrote a mind-blowing book in 1991 called Mirror Worlds.

Mirror Worlds? What are they? They are software models of some chunk of reality, some piece of the real world going on outside your window. Oceans of information pour endlessly into a model (through a vast maze of software pipes and hoses): so much information that the model can mimic the reality’s every move, moment-by-moment.

Is this a terrifying idea? It is. So terrifying that the notion took possession of a paranoid, isolated Harvard graduate named Ted Kaczynski, also known as the Unabomber.

Kaczynski sent a mail bomb to Gelernter, then a professor at Yale. The bomb blew up the young professor’s right hand and blinded his right eye. Gelernter survived. So did his ideas.

As usual with advances in data and AI, there is a glorious, and a ghastly, version of developments.

On the glory side, with the ability to test concepts on thousands of virtual people, we can play out (for instance) swift, shrewd models of financial policy… social program testing… consumer research… and thus can accelerate the speed of human innovation.

On the ghastly side--well, what if some autocrat could do cheap, easy mass experiments on human emotions? Using a million-“person” panel of Joon Sung Park’s creation, it could become a form of mind control.

Thirty years later--and wiser, surely; Joon Sung Park told the MIT Technology Review he spent “more than a year wrestling with ethical issues” before completing this latest research--we enter Gelernter’s Mirror World.

Only, maybe I’ll ask my digital twin to go first.
