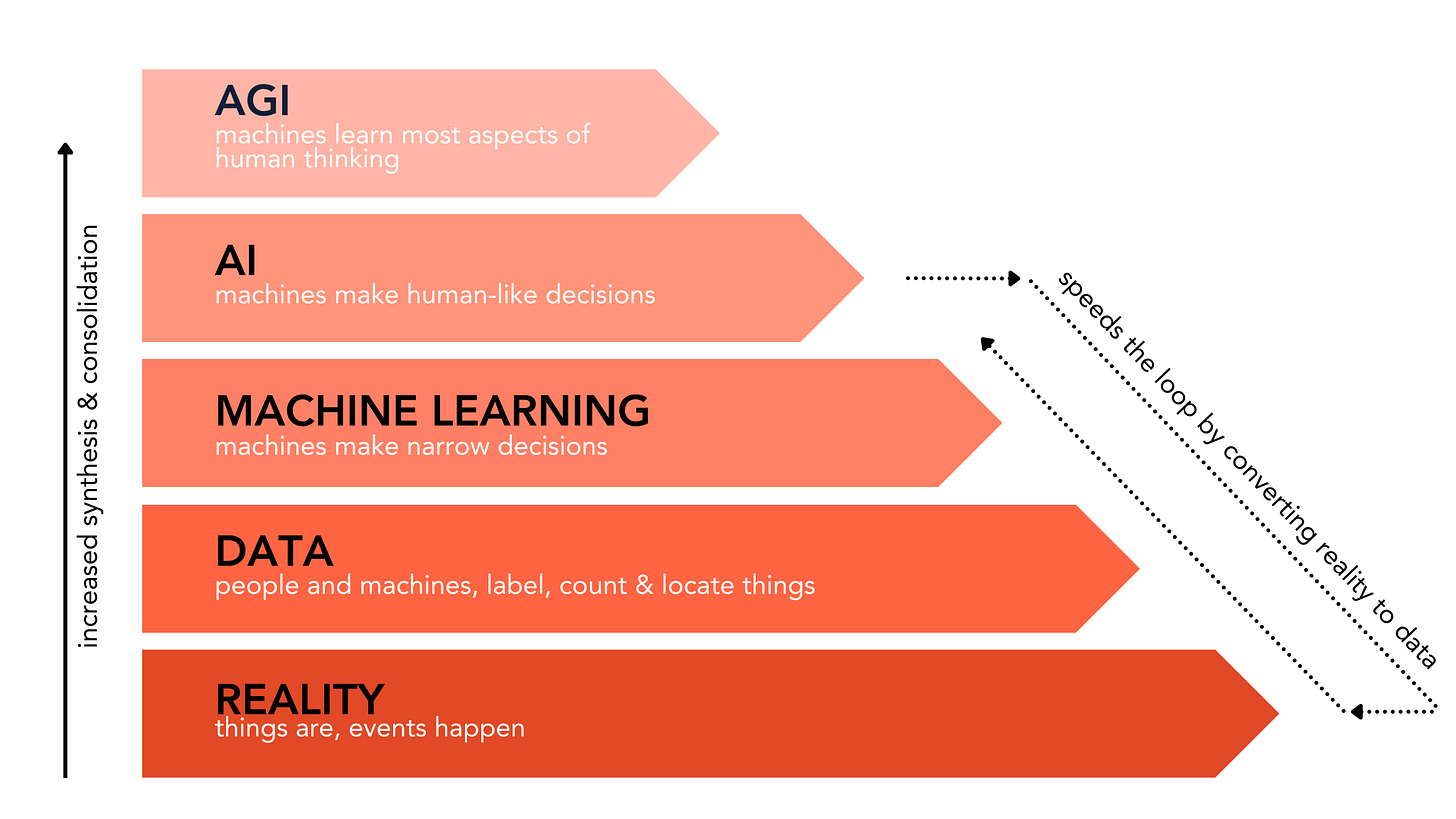

The Analytics Pyramid

What's the difference between AI, machine learning, and data?

AI is so hyped it seems only a matter of time until we have, like, AI soap. Folks are labeling all sorts of projects as AI. I don’t necessarily blame them. It’s difficult to answer the question: how do data, machine learning, and AI differ?

I was called upon to answer this question when speaking at a conference recently, and I couldn’t. (Okay, I wasn’t “called upon” to do so. But the chance was there and I watched the topic pass like a tennis player getting aced.)

I believe the answer should be a framework snappy enough to explain in a few seconds. It’s a common enough topic nowadays that you should be able to answer it simply.

After my conference flub I searched for a pre-existing definition… and came up empty. So I am herewith presenting my own.

There is a conceptual path leading from reality… through data… to machine learning… to AI. Let’s call it the Analytics Pyramid.

Here is the short version:

In reality… things are, and events happen.

In data… people and machines label, count, and locate things.

In machine learning… machines make narrow decisions.

In AI… machines make human-like decisions.

In General AI… machines make human-like decisions in many areas.

There is a longer version. It involves Valentine’s Day, self-driving vehicles, and multiple references to psychedelics.

REALITY

At the base of the pyramid lies reality. In reality--to be as pithy as possible--things are. Events happen. For instance, if you go to the corner of El Camino Real and Castro Street in Mountain View, California, you will see some reality.

Mountain View, the heart of Silicon Valley, strikes dread into a New Yorker’s soul. Because it really is stucco suburban hell. And the restaurants are just okay.

At this particular corner, there is a print shop, and across the way, a Chase bank. The weather is always sunny. There is always parking. Yawn.

But that’s my cognitive filter. That’s all I see: Bank, sunny, available parking.

If a snarky New Yorker like me were to take a 1,000 mg dose of psilocybin mushrooms and wander to this corner… why, then… the oak leaves overhead… the sandy stone of the print shop wall… the musically pausing and advancing traffic… would become sensory nirvana. Thanks to the drug, reality would be unfiltered. The multitude of nameable and unnamable objects, all that complexity and change, would be revealed.

DATA

Data is the application of a filter to reality.

Think about our most essential act of data-gathering: the Census. Someone at the Census Bureau has created a labeling scheme: age, education, housing, employment. Then census-takers knock on doors. Label the residents. Count them. In the 1800s, the Census called its census-takers “enumerators.”

Enumerators act as the bridge between an infinitely complex reality and something we can analyze. Something as infinitely complex as a town (Mountain View) can be enumerated: population 82,376, median age 35.9 years.
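For readers who like to see the filter in action, here is a minimal Python sketch of enumeration. The labeling scheme, the field names, and the household observations are all hypothetical; the point is only that a census-style filter keeps a few labeled fields, throws the rest of reality away, and then counts and summarizes what’s left.

```python
from statistics import median

# Hypothetical labeling scheme: the only fields the enumerator records.
LABELING_SCHEME = ("age", "employed")

def enumerate_residents(observations):
    """Filter each observation down to the labeling scheme's fields."""
    return [{field: obs[field] for field in LABELING_SCHEME} for obs in observations]

# Hypothetical door-to-door observations; most of what was seen gets dropped.
observations = [
    {"age": 34, "employed": True, "favorite_song": "Yesterday", "mood": "sunny"},
    {"age": 41, "employed": False, "pets": 2},
    {"age": 29, "employed": True, "hobby": "surfing"},
]

records = enumerate_residents(observations)     # label
population = len(records)                        # count
median_age = median(r["age"] for r in records)   # summarize
print(population, median_age)  # 3 34
```

The favorite song, the mood, the pets: none of it survives the filter. That loss is exactly what makes the result countable.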

Obviously this is a filtered, incomplete view of reality. In so many ways, data is the opposite of tripping. It is reductive. In this way, it is very human. Through a glass darkly, data peers out into the world.

And the act of data-gathering serves a critical purpose. In effect, it gazes out at unstructured things and, in its way, perceives them, then records those perceptions in an internally coherent fashion. As such, data-gathering serves as the five senses of analysis: it perceives things and events and records them for processing.

GETTING MACHINES INVOLVED

But we can get machines to help us, even at this lower level of the pyramid where we’re still gathering data, because our devices are “smart.” In this way, our devices, our machines, can also be enumerators. So we can be a little expansive in our description of the data layer in the Analytics Pyramid and say, “people and machines label, count, and locate things.”

Consider the white Lexus RX450h approaching the print shop on El Camino Real on the afternoon of Valentine’s Day.

This particular Lexus is a five-thousand-pound smart device. It’s part of Google’s self-driving vehicle program. It’s using cameras, RADAR, and LIDAR (“light detection and ranging”) to perceive the world outside the car. That cluster of sensors sitting on top of the car: the car’s eyes.

MACHINE LEARNING, OR ANALYTICS

To jump up a layer in the pyramid, we will start using that data. If the data itself is like raw perceptual information, now it’s up to the models and algorithms to make sense of the data--to perceive the world at the corner of El Camino Real and Castro.

They start by doing this in a narrow way. They apply a score to the raw perceptual information, to link it to the idea of an object.

You might compare this to a familiar marketing scenario: a direct marketing model that scores each home address, or each cookie, on how likely it is to respond to an offer.

You wouldn’t say the model is taking over from a human by doing this. Or that this is necessarily artificial intelligence. That model is making one narrowly defined decision. Good household to send a flyer to, or not?
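Here is what that one narrow decision might look like as code: a minimal logistic scoring sketch in Python. The feature names, weights, bias, and 0.5 cutoff are invented for illustration; a real direct marketing model would learn its coefficients from past campaign responses.

```python
import math

# Hypothetical model: weights a real model would learn from past campaigns.
WEIGHTS = {"past_purchases": 0.8, "years_at_address": 0.1, "opened_last_email": 1.2}
BIAS = -3.0
CUTOFF = 0.5  # hypothetical threshold for "worth a flyer"

def response_score(household):
    """Logistic score: estimated probability that this household responds."""
    z = BIAS + sum(w * household.get(name, 0) for name, w in WEIGHTS.items())
    return 1 / (1 + math.exp(-z))

def send_flyer(household):
    """The one narrowly defined decision: send a flyer, or not?"""
    return response_score(household) >= CUTOFF

loyal = {"past_purchases": 3, "years_at_address": 5, "opened_last_email": 1}
newcomer = {"past_purchases": 0, "years_at_address": 1, "opened_last_email": 0}
print(send_flyer(loyal), send_flyer(newcomer))  # True False
```

The model outputs a single probability and a yes/no. Nothing in it knows what a flyer, a household, or an offer is.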

Back inside the Lexus--which, by the way, is driving itself down El Camino Real at the posted speed limit--the LIDAR is pinging away. It is perceiving three-dimensional space, assigning volumetric pixels, or “voxels,” to the edges and surfaces of the objects around it. Assigning labels to those objects is called classification: linking raw perceptual data to the idea of an object.
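A rough sketch of that pipeline in Python, under heavy assumptions: LIDAR returns arrive as (x, y, z) points in meters, voxelization snaps each point to a hypothetical 20 cm grid, and a toy rule labels the object by its height. Real perception systems use learned classifiers, not hand-set thresholds like these.

```python
import math

VOXEL_SIZE = 0.2  # meters per voxel edge (hypothetical resolution)

def voxelize(points, size=VOXEL_SIZE):
    """Snap each (x, y, z) LIDAR return to the integer index of the voxel containing it."""
    return {tuple(math.floor(c / size) for c in p) for p in points}

def classify(voxels, size=VOXEL_SIZE):
    """Toy classifier: label an object by the height of its voxel stack.
    Thresholds are hypothetical; real systems learn this from data."""
    z_indices = [v[2] for v in voxels]
    height = (max(z_indices) - min(z_indices) + 1) * size
    if height < 0.5:
        return "low obstacle"
    if height < 2.0:
        return "medium object"
    return "tall object"

# Hypothetical returns from a few low, oblong shapes in the road.
returns = [(5.05, 1.05, 0.05), (5.1, 1.1, 0.25), (5.3, 1.15, 0.3)]
print(classify(voxelize(returns)))  # low obstacle
```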

But sometimes it’s hard.

Like when the Lexus’s self-driving system tells it to drive into the intersection and make a right, but the LIDAR is passing back information that there are all these weird oblong shapes in the road ahead.

The system consults the LIDAR, and now the RADAR and the cameras too. A new algorithm, clustering, kicks in: it groups the scattered readings that likely belong to the same object, so the system can make a classification even from incomplete information.
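Clustering can be sketched in a few lines. This is a simple distance-threshold (single-linkage) grouping, chosen as a stand-in; the essay doesn’t say which clustering method Google’s system used, and the 0.5 m gap is an assumption. Points closer than the gap, directly or through a chain of neighbors, end up in the same candidate object, which a classifier can then label.

```python
import math
from collections import deque

def euclidean_clusters(points, max_gap=0.5):
    """Single-linkage clustering: points closer than max_gap (meters), directly
    or through a chain of neighbors, end up in the same cluster."""
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        queue, members = deque([seed]), [seed]
        while queue:
            i = queue.popleft()
            # Every not-yet-assigned point within max_gap joins this cluster.
            near = [j for j in unvisited if math.dist(points[i], points[j]) < max_gap]
            for j in near:
                unvisited.remove(j)
                queue.append(j)
                members.append(j)
        clusters.append(sorted(members))
    return clusters

# Hypothetical LIDAR returns (x, y) in meters: one oblong shape and one small one.
returns = [(0.0, 0.0), (0.3, 0.0), (0.6, 0.0), (5.0, 5.0), (5.2, 5.1)]
print(sorted(euclidean_clusters(returns)))  # [[0, 1, 2], [3, 4]]
```

Note that points 0 and 2 are 0.6 m apart, more than the gap, yet they share a cluster because point 1 links them. That chaining is what lets a few scattered pings add up to one oblong sandbag.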

Got it.

Those are sandbags.

The lane is closed. Yikes. Now what?

The machine learning or algorithmic layer has done its job. It has taken unstructured perceptual data and made some important decisions. Like, “those are sandbags up ahead.” But for this Lexus to really act like a human and make decisions, more is required.

STACKING

An idea I’ll call stacking kicks in now. By stacking, I mean that as you go up the pyramid, you synthesize more data and more scores into concepts that factor in all of them.

And it’s why the pyramid shape is apt. To mimic human thought--to be artificial intelligence--more input than “hey, man, that’s a sandbag” is required.

First, the Lexus needs to decide to stop and not drive into the sandbags. So it needs a decision matrix algorithm that predicts that driving into the sandbags means striking a solid object. And striking a solid object with car = bad.
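One common way to read “decision matrix” is a table of costs for each action under each possible outcome, combined with the classifier’s probabilities to get an expected cost. The sketch below assumes that reading; the actions, outcomes, and cost numbers are hypothetical.

```python
# Hypothetical costs for each (action, outcome) pair; lower is better.
COSTS = {
    ("drive_ahead", "solid_object"): 1000.0,  # striking a solid object with car = bad
    ("drive_ahead", "clear_road"): 0.0,
    ("stop", "solid_object"): 1.0,            # small delay
    ("stop", "clear_road"): 5.0,              # needless stop in traffic
}

def choose_action(p_solid):
    """Return the action with the lowest expected cost, given the classifier's
    probability that the thing ahead is a solid object."""
    outcome_probs = {"solid_object": p_solid, "clear_road": 1.0 - p_solid}
    actions = sorted({action for action, _ in COSTS})
    expected = {
        a: sum(COSTS[(a, o)] * p for o, p in outcome_probs.items()) for a in actions
    }
    return min(expected, key=expected.get)

print(choose_action(0.9))    # stop
print(choose_action(0.001))  # drive_ahead
```

The lopsided cost of a collision means the car stops even when it is only fairly sure the shapes are solid.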

Then it needs more inputs, namely a road map and a destination. Otherwise it might just say, “You know, this print shop is pretty great. Maybe I’ll just stop here. I mean, it’s 73 degrees and sunny, why not? And there’s so much parking.”

The Lexus also needs GPS to remind it that there’s still a ways to go to its destination.

Finally, it needs more perceptions and classifications from its LIDAR, RADAR, and cameras to tell it how to get around this obstacle and complete its journey. To continue, the Lexus needs to merge into the left lane and keep going on El Camino Real for another thirty feet, so it can complete the right turn onto Castro.

Now it needs to engage the vehicle’s “drive by wire” system, the tech that connects the decision matrix algorithms to the mechanics of the car.

THE AI LAYER

So we’re still stacking! Classified object + decision matrix + route + GPS + “drive by wire” system… all of this stacking, all these narrow decisions… they sum up to something bigger… sum up to the human-like decision to…

…MERGE LEFT!
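Stacking can be sketched as a function whose inputs are the outputs of narrower systems. The field names and the rule order below are hypothetical, a toy under stated assumptions rather than how any real self-driving stack is built. Each input stands in for one layer of the story: the classified object, the route plus GPS, fresh perception of the left lane, and the drive-by-wire link.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class StackedInputs:
    obstacle: Optional[str]       # classifier output, e.g. "sandbags", or None
    meters_to_destination: float  # route + GPS
    left_lane_clear: bool         # fresh LIDAR/RADAR/camera perception (a prediction!)
    drive_by_wire_ready: bool     # can decisions reach the steering and brakes?

def next_maneuver(x: StackedInputs) -> str:
    """Combine several narrow outputs into one higher-level maneuver."""
    if x.meters_to_destination <= 0:
        return "park"        # arrived; no need to go anywhere
    if x.obstacle is None:
        return "continue"    # nothing in the lane ahead
    if x.left_lane_clear and x.drive_by_wire_ready:
        return "merge_left"  # go around the obstacle
    return "stop"            # decision matrix: don't hit the solid object

print(next_maneuver(StackedInputs("sandbags", 500.0, True, True)))  # merge_left
```

Notice that every input is itself a prediction. If the perception and prediction layers report the left lane as clear when a bus is about to fill it, the stacked decision inherits that mistake.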

And this is where the accident happens.

There happens to be a city bus driving past.

The Lexus’s decision algorithm predicts that if the Lexus turns on its blinker, signaling a left turn… and a city bus is next to it… why, that city bus will yield!

Guess it didn’t have enough training data about cranky bus drivers. Even in Mountain View--forget about Madison Avenue--the buses don’t stop for nothin’.

The Lexus pulls left and smashes into the city bus. And lo, the Valentine’s Day fender-bender has occurred, one of the first in the Google program.

This was in 2016, some years ago. I’m not taking anything away from the artificial intelligence. Yes, the decision to merge left was wrong. But humans are often wrong, too. No one was injured. And the sun still shone in Mountain View.

DYNAMICS - STACKING

I am describing a conceptual model. Its goal is to create a shorthand for normal people to think about how AI does its thing… what the difference is between AI and modeling/machine learning… and the role of data in the framework.

There are dynamics that make the conceptual model work.

The first is: the higher in the pyramid you go, the more sophisticated the stacking. A computer science person might call this “abstraction.”

Reality has the most complexity.

Then you need to create data about that reality. Data takes some complexity out because it reduces reality to a few meaningful filters.

Machine learning applies mathematical models and predictive statistics to the data. It does this to make a single type of prediction, or to apply a particular score or decision. It decides that “this is a turning lane” and “this is a sandbag.”

AI combines many predictions and data points--stacks them--until they add up to a more sophisticated, human-like decision. Like, “I have not arrived yet. I need to merge left, so I can get past the sandbags; then make a right on Castro Street, so I can get to my destination.”

Were we to join the speculation about whether our species can create Artificial General Intelligence, AGI would, in this framework, merely be a case of more stacking. The AGI would not only learn to drive; it would learn to practice medicine, apply laws, make lunches for kids, invest in the stock market, and crack jokes. It would know you offer cocktails before dinner and digestifs after. It would stack up human-like understanding about so many areas of human existence that it would be a version of ourselves.

DYNAMICS - AI CREATES MORE DATA

The AI layer implies the ability for machines to make data. Why not give an AI bot the task of, for instance, creating maps as it drives around a strange city, thereby creating more data for the next Lexus to use? This is a fascinating part of the dynamic, because it means the cycle can accelerate itself.

The more data we have, the more the machines have to chew on… so the machines can make more data.
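The feedback loop can be simulated in a few lines. Everything here is hypothetical: a city of 1,000 street corners, a fleet that drives 50 trips, and a shared map that every car both reads from and writes to. Each trip reuses whatever earlier trips mapped and contributes whatever it newly sees, so later trips find more of the city already documented.

```python
import random

def drive_and_map(shared_map, city_corners, corners_per_trip, rng):
    """One trip: visit some corners, count how many were already mapped,
    then write everything seen back to the shared map."""
    visited = rng.sample(city_corners, corners_per_trip)
    already_mapped = sum(1 for corner in visited if corner in shared_map)
    shared_map.update(visited)  # the machine producing data for the next machine
    return already_mapped

rng = random.Random(0)  # fixed seed so the run is repeatable
city = [f"corner-{i}" for i in range(1000)]  # hypothetical city
shared_map = set()
reused = [drive_and_map(shared_map, city, 40, rng) for _ in range(50)]

print(f"mapped {len(shared_map)} of {len(city)} corners")
print(f"first trip reused {reused[0]}, last trip reused {reused[-1]}")
```

The first trip starts from nothing; by the last trip, most of what the car sees was already mapped by its predecessors.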

In “Intelligent Machinery,” Alan Turing imagines a sort of Rube Goldberg AI device roaming the countryside with a camera for a face, wheels for legs, and little robot clamps for hands. But he acknowledges, “the creature would have no contact with food, sex, sport, and many other things of interest to a human being.”

Fair--but that was 1948. With so much of the world enumerated by three decades of smart devices, the internet, and big data, it’s hard to imagine any nook of human endeavor not significantly documented with data. Or having the potential to be documented.

And then, it’s easy to imagine this Analytics Pyramid expanding its base of data through automated cycles of data gathering and interpretation. A million street corners as dull or delightful as El Camino Real and Castro, guessed at, and documented, through the shadows of human… and machine… understanding.